In The News

ModalAI Helps Make Greenhouse Profits Bloom!

Innervating Smaller, Smarter, Safer…and Bluer Robots and Drones

The Blue sUAS Framework: A Conversation with ModalAI

ModalAI Named Winner in 2021 Artificial Intelligence Excellence Awards

Dronecode Foundation Announces Appointment of New Board Directors

Dronecode Members ModalAI and AirMap Receive U.S. DoD Grant to Support the Domestic sUAS Industrial Base

Qualcomm's 5G RB5 robotics platform will help drones navigate tight spaces

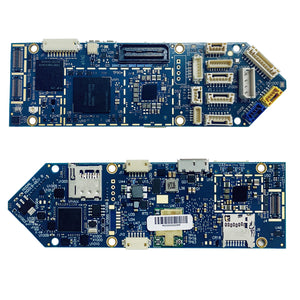

VOXL Flight® Receives Press Coverage

VOXL from ModalAI Contributes to Uber Eats Drone Delivery Testing

Dronecode welcomes Microsoft, ModalAI, and Teal as new members